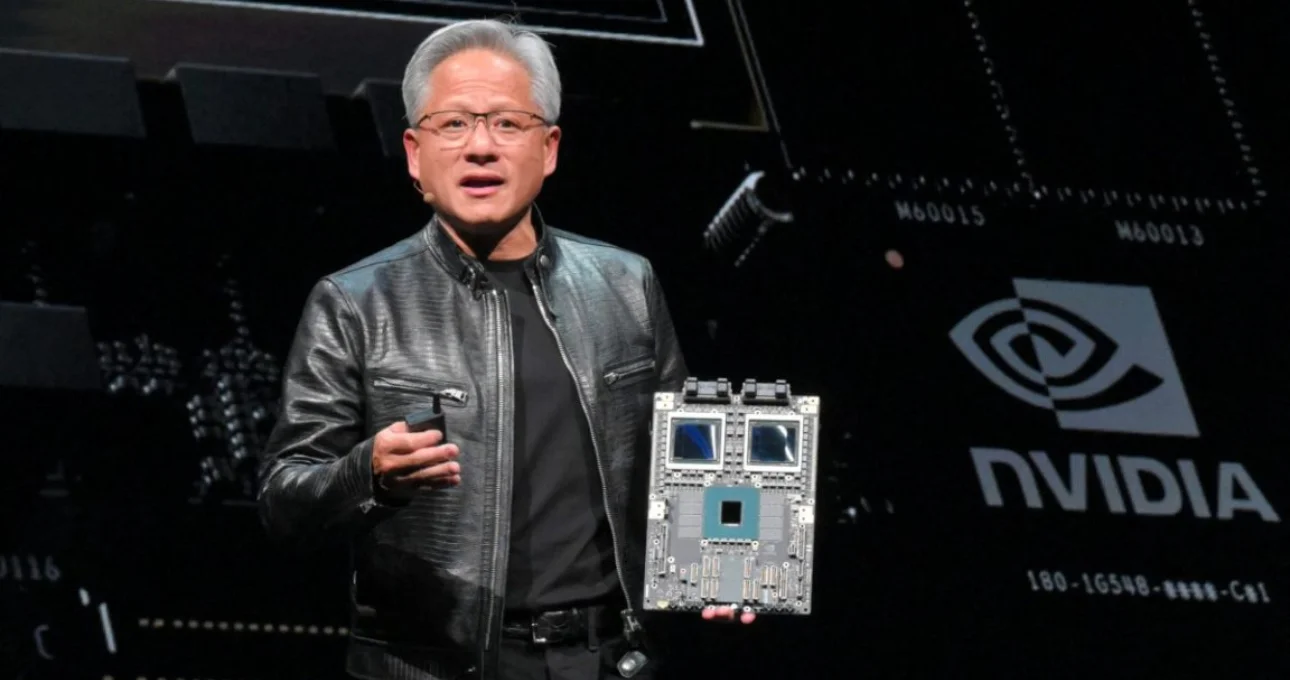

Nvidia has once again set the tone for the future of artificial intelligence infrastructure by unveiling Vera Rubin, its next-generation AI computing platform, at CES 2026. Positioned as the successor to the widely adopted Blackwell architecture, Vera Rubin represents more than an incremental performance upgrade. It marks a strategic shift toward rack-scale, system-level AI computing designed for the next wave of large-scale training and inference.

Rather than focusing solely on faster GPUs, Nvidia is rethinking how AI compute is deployed across entire data centers. Vera Rubin is built as a tightly integrated stack—combining CPUs, GPUs, high-speed interconnects, advanced networking, and embedded security into a unified platform. This approach aims to help hyperscalers and emerging “neocloud” providers scale AI workloads rapidly, without the costly and time-consuming process of redesigning infrastructure for each new hardware generation.

Moving Beyond the GPU-Centric Era

For years, Nvidia’s dominance in AI has been anchored in its GPU leadership. With Vera Rubin, the company is expanding that vision to a holistic, rack-scale architecture. The goal is to treat the data center itself as the computing unit, optimized end-to-end for AI workloads.

This shift reflects a growing reality in AI development. Training frontier models and running large-scale inference are no longer limited by raw compute alone. Bottlenecks increasingly arise in data movement, networking latency, power efficiency, and security. Vera Rubin addresses these challenges with a system designed to work in concert, rather than as a collection of loosely connected components.

Built for Hyperscalers and Neoclouds

One of Nvidia’s key messages at CES was speed of deployment. Vera Rubin is designed so cloud providers can roll out new AI capacity without reengineering their data centers from the ground up. By standardizing compute, networking, and interconnects at the rack level, Nvidia is offering a blueprint that can be replicated at scale.

This is particularly appealing to hyperscalers and a new class of AI-focused infrastructure providers often referred to as neoclouds. These companies are racing to meet surging demand for AI compute while keeping capital and operational costs under control. Vera Rubin’s integrated design promises faster time-to-production and more predictable performance across deployments.

Training and Inference at a New Scale

Vera Rubin is engineered to support both massive AI training runs and increasingly complex inference workloads. As AI models grow larger and more multimodal, inference is becoming a compute-intensive workload in its own right, in some deployments rivaling training. Nvidia’s architecture reflects this shift, optimizing for continuous, high-throughput AI operations rather than sporadic training cycles.

The platform also anticipates future demands around real-time AI services, where latency, reliability, and security are critical. By embedding these considerations directly into the architecture, Nvidia is positioning Vera Rubin as a long-term foundation for AI services across industries.

Early Rollout Signals Confidence

Nvidia’s decision to roll out Vera Rubin early underscores its confidence in the platform and its desire to stay ahead of competitors. By giving partners and customers early access, Nvidia is enabling ecosystem alignment—from software optimization to data center planning—well before the platform reaches full-scale adoption.

This proactive approach has been a hallmark of Nvidia’s strategy, allowing it to shape industry standards rather than react to them.

Redefining the Next AI Compute Wave

With Vera Rubin, Nvidia is betting that the next phase of AI innovation will be defined by system-level efficiency, not just raw performance. The company’s message is clear: the future of AI compute lies in integrated, scalable platforms that simplify deployment while maximizing throughput.

As AI continues to reshape industries, Vera Rubin positions Nvidia not just as a chipmaker, but as the architect of the AI data center itself. In doing so, Nvidia is setting the pace for the next generation of AI computing—one rack at a time.